This document is part of a developing theoretical framework authored by Christopher Lee Burgess.

Abstract

Classical variance is insufficient for characterizing the geometric structure of multimodal data. In this work, we define a new quantity called the pseudovariance and demonstrate how it captures shape, modality, and dispersion through a general class of functions we call “forma”. By contrasting this interpretation of variance with classical variance, we reveal how non-localized behavior forces us to revise the traditional axiomatic framework. From this, we provide a closed analytic form for multimodal distributions and demonstrate how it corresponds with members of the exponential family. Finally, we explore the connections between pseudovariance and unimodal moment behavior.

Key Words: Pseudovariance, Multimodal, Variance, Analytic Form.

Introduction

It is somewhat ironic that the formulation of variance has itself varied considerably across the history of statistics. For now, we will focus on the one-dimensional case. If we define the mapping $f(x) = x^2$ for a variable $x$ and a parameter $\mu$ in $\mathbb{R}$, classical variance can be described by the following formula:

$$\operatorname{Var}(X) = \mathbb{E}\left[f(X - \mu)\right] = \mathbb{E}\left[(X - \mu)^2\right],$$

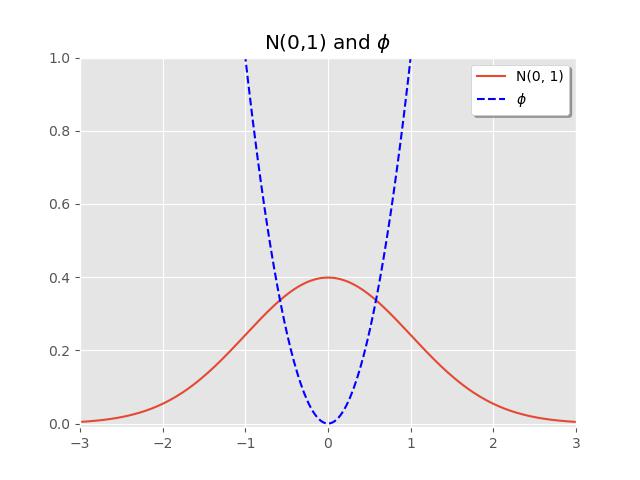

where the parameter $\mu$ is the mean of our data. The graphic below illustrates the contribution of $f$ to our classical measure:

From this, we see that the farther a sampled data point lies from the mean (zero in this case), the larger its contribution $f(x)$ to the variance.
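As a minimal sketch of the formula above, assuming the data arrive as a NumPy array, classical variance can be computed as the sample average of $f(x - \mu)$ with $f(x) = x^2$ and $\mu$ the sample mean. The helper names are hypothetical, and the result is the population (ddof=0) variance rather than the unbiased estimator.

```python
import numpy as np

def forma_square(x):
    """Classical forma f(x) = x**2."""
    return x ** 2

def classical_variance(samples):
    """Sample average of f(x - mu), where mu is the sample mean."""
    samples = np.asarray(samples, dtype=float)
    mu = samples.mean()
    return forma_square(samples - mu).mean()

rng = np.random.default_rng(0)
x = rng.normal(loc=0.0, scale=2.0, size=100_000)
# Agrees with NumPy's population variance (ddof=0) up to rounding; both are near 4.
print(classical_variance(x), np.var(x))
```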

Historically, classical variance has been shown to satisfy the following axioms and assumptions:

Axiom 0

If our random variable $X = c$ for some constant $c$, then $\operatorname{Var}(X) = 0$.

Axiom 1 (Positivity)

If $X$ is not almost surely constant, then $\operatorname{Var}(X) > 0$.

Assumption 0 (Localization Invariance)

For any constant $c$, we have the property $\operatorname{Var}(X + c) = \operatorname{Var}(X)$.

Assumption 1

For any constant $a$, we have the property $\operatorname{Var}(aX) = a^2 \operatorname{Var}(X)$.

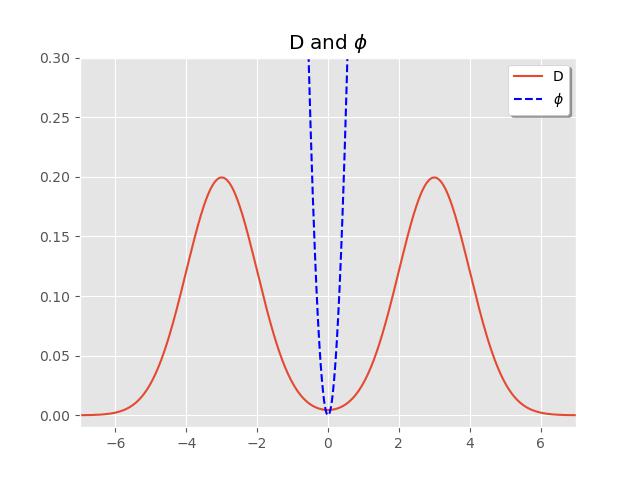
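The axioms and assumptions above can be sanity-checked numerically on simulated data. This is an illustration, not a proof; it assumes the population variance `np.var` (ddof=0), and the constants $c = 5$ and $a = 3$ are arbitrary choices.

```python
import numpy as np

rng = np.random.default_rng(1)
x = rng.normal(size=10_000)
c, a = 5.0, 3.0
var = np.var  # population variance: mean of (x - mean)**2

# Axiom 0: a constant random variable has zero variance.
print(var(np.full(100, c)) == 0.0)
# Axiom 1 (positivity): a non-constant sample has positive variance.
print(var(x) > 0.0)
# Assumption 0 (localization invariance): Var(X + c) = Var(X).
print(np.isclose(var(x + c), var(x)))
# Assumption 1 (scaling): Var(aX) = a^2 Var(X).
print(np.isclose(var(a * x), a ** 2 * var(x)))
```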

However, one key point of this article is that classical variance inherently assumes a unimodal central tendency and does not fully characterize the spread of multimodal distributions. As an example, define the distribution $p = \tfrac{1}{2}\mathcal{N}(-a, \sigma^2) + \tfrac{1}{2}\mathcal{N}(a, \sigma^2)$ for some $a > 0$. If we repeatedly sample from $p$, the equal weighting of the Gaussians $\mathcal{N}(-a, \sigma^2)$ and $\mathcal{N}(a, \sigma^2)$ yields a sample mean that converges to zero, a region where almost no data lie, and $\operatorname{Var}(X) = a^2 + \sigma^2$ clearly does not capture the concentration of data around the modes:

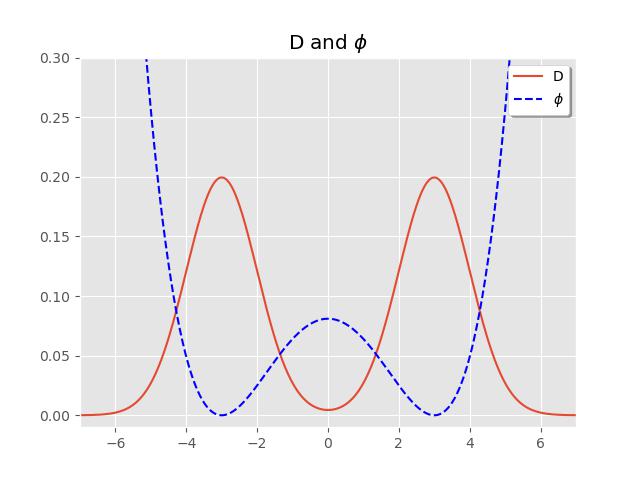
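To reproduce this effect, here is a hedged sketch that samples the equal-weight mixture $\tfrac{1}{2}\mathcal{N}(-a, \sigma^2) + \tfrac{1}{2}\mathcal{N}(a, \sigma^2)$ with the illustrative (assumed) values $a = 2$ and $\sigma = 0.5$. The sample mean lands near zero, where almost no data lie, while the variance approaches $a^2 + \sigma^2 = 4.25$, a spread measured from an essentially empty region.

```python
import numpy as np

def sample_bimodal(n, a=2.0, sigma=0.5, seed=0):
    """Draw n samples from 0.5 N(-a, sigma^2) + 0.5 N(a, sigma^2)."""
    rng = np.random.default_rng(seed)
    centers = rng.choice([-a, a], size=n)          # equal mixture weights
    return centers + rng.normal(scale=sigma, size=n)

x = sample_bimodal(200_000)
print(x.mean())                                   # near 0
print(x.var())                                    # near a**2 + sigma**2 = 4.25
# Share of samples within 0.5 of the mean versus within 0.5 of the nearest mode.
print(np.mean(np.abs(x) < 0.5))                   # tiny (well under 1%)
print(np.mean(np.abs(np.abs(x) - 2.0) < 0.5))     # about 0.68
```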

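At first glance, given the shape of this bimodal distribution, we can guess that $f(x) = (x^2 - a^2)^2$, for some positive constant $a$, is a better-suited mapping, since it vanishes at the modes $\pm a$ rather than at the mean. From this, we observe more robust contributions to dispersion under $f$: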

We can see how, by letting $f$ be of this form, our pseudovariance violates the localization assumption above: in general, $\mathbb{E}[f(X + c)] \neq \mathbb{E}[f(X)]$. Later in our research, we will find that this is not a bug, but a feature of this generalization. However, it is important to first formalize our definitions in order to understand what is being conveyed here.
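Here is a sketch of the localization violation. It assumes the pseudovariance is estimated as the sample average of a nonnegative forma, and it takes the bimodal forma $f(x) = (x^2 - a^2)^2$ with $a = 2$; the helper names and parameter values are hypothetical. Shifting the data changes the pseudovariance, whereas classical variance is unchanged. For this mixture, expanding $X = \pm a + \varepsilon$ by hand gives $\mathbb{E}[f(X)] = 4a^2\sigma^2 + 3\sigma^4$, which is $4.1875$ for $\sigma = 0.5$.

```python
import numpy as np

def bimodal_forma(x, a=2.0):
    """Hypothetical bimodal forma f(x) = (x**2 - a**2)**2, zero at x = -a and x = a."""
    return (x ** 2 - a ** 2) ** 2

def pseudovariance(samples, forma):
    """Monte Carlo estimate of E[f(X)] for a nonnegative forma f."""
    return np.mean(forma(np.asarray(samples, dtype=float)))

rng = np.random.default_rng(0)
n = 200_000
# Equal-weight mixture 0.5 N(-2, 0.5^2) + 0.5 N(2, 0.5^2).
x = rng.choice([-2.0, 2.0], size=n) + rng.normal(scale=0.5, size=n)

pv = pseudovariance(x, bimodal_forma)                 # near 4.1875
pv_shifted = pseudovariance(x + 1.0, bimodal_forma)   # same data shifted by c = 1
print(pv, pv_shifted)                                 # the shift changes the value
print(np.isclose(np.var(x + 1.0), np.var(x)))         # classical variance is unchanged
```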

On Pseudovariance

To formalize these concepts related to pseudovariance and multimodal distributions, I’ll first introduce a few definitions:

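Definition 1 (Pseudovariance) Given some distribution $p$ over a random variable $X$ and a positive semidefinite function $f$ (that is, $f(x) \ge 0$ for all $x$), the corresponding pseudovariance is as follows:

$$\operatorname{PVar}_f(X) = \mathbb{E}_{p}\left[f(X)\right] = \int_{\mathbb{R}} f(x)\, p(x)\, dx.$$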

We will refer to $f$ as the forma function, since “forma” is Latin for “shape”. Later we will see that it is important to choose the forma $f$ such that the pseudovariance satisfies Axioms 0 and 1 from classical variance. Essentially, this definition relaxes the assumptions stated above.
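When $p$ has a density, Definition 1 can also be evaluated deterministically. This sketch assumes the pseudovariance is $\int f(x)\,p(x)\,dx$ and approximates the integral with a Riemann sum on a truncated grid. The range $[-20, 20]$ is an assumption that is only safe for light-tailed densities, and the helper names are hypothetical. With $f(x) = x^2$ and a zero-mean Gaussian, it recovers the classical variance $1.5^2 = 2.25$. With the bimodal forma $(x^2 - 4)^2$ and the mixture $\tfrac{1}{2}\mathcal{N}(-2, 0.25) + \tfrac{1}{2}\mathcal{N}(2, 0.25)$, it gives $4a^2\sigma^2 + 3\sigma^4 = 4.1875$.

```python
import numpy as np

def gaussian_pdf(x, mean, sd):
    """Density of N(mean, sd**2)."""
    return np.exp(-0.5 * ((x - mean) / sd) ** 2) / (sd * np.sqrt(2.0 * np.pi))

def pseudovariance_quadrature(forma, pdf, lo=-20.0, hi=20.0, n=400_001):
    """Approximate the integral of forma(x) * pdf(x) over [lo, hi] by a Riemann sum."""
    x = np.linspace(lo, hi, n)
    dx = x[1] - x[0]
    return np.sum(forma(x) * pdf(x)) * dx

# Unimodal: f(x) = x^2 with a zero-mean Gaussian recovers the classical variance.
pv_uni = pseudovariance_quadrature(lambda x: x ** 2,
                                   lambda x: gaussian_pdf(x, 0.0, 1.5))

# Bimodal: f(x) = (x^2 - 4)^2 with 0.5 N(-2, 0.5^2) + 0.5 N(2, 0.5^2).
pv_bi = pseudovariance_quadrature(
    lambda x: (x ** 2 - 4.0) ** 2,
    lambda x: 0.5 * gaussian_pdf(x, -2.0, 0.5) + 0.5 * gaussian_pdf(x, 2.0, 0.5),
)
print(pv_uni, pv_bi)   # approximately 2.25 and 4.1875
```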

Definition 2 (Pseudocovariance) Let $p$ be a probability distribution over the random vector $(X, Y)$, and let $f$ be a positive semidefinite, measurable function. Then the pseudocovariance of $X$ and $Y$ with respect to $f$ is defined as:

Definition 3 (Local Minimum) For any smooth function $f$, we say the point $x^{*}$ achieves a local minimum $f(x^{*})$ if and only if $f'(x^{*}) = 0$ and $f''(x^{*}) > 0$.

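Definition 4 (Pseudomean) Given any pseudovariance $\operatorname{PVar}_f$ over $X$, define the set $\mathcal{M}_f = \{x \in \mathbb{R} : f'(x) = 0,\ f''(x) > 0\}$; every $m \in \mathcal{M}_f$ will be called a pseudomean of $X$.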

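For example, in the standard unimodal case, we let $f(x) = x^2$, and thus $\mathcal{M}_f = \{0\}$.

In the standard bimodal case, if we let $f(x) = (x^2 - a^2)^2$, we can show that $\mathcal{M}_f = \{-a, a\}$: the derivative $f'(x) = 4x(x^2 - a^2)$ vanishes at $x \in \{-a, 0, a\}$, and $f''(x) = 12x^2 - 4a^2$ is positive at $\pm a$ but negative at $0$.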
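For polynomial formas, Definitions 3 and 4 can be applied mechanically: find the real roots of $f'$ and keep those where $f'' > 0$. This sketch uses `numpy.polynomial.Polynomial`. The helper `pseudomeans` and the tolerance used to discard complex roots are assumptions, and $a = 2$ is an illustrative value.

```python
import numpy as np
from numpy.polynomial import Polynomial

def pseudomeans(forma, tol=1e-9):
    """Hypothetical helper: real x with f'(x) = 0 and f''(x) > 0 (Definitions 3 and 4)."""
    d1, d2 = forma.deriv(1), forma.deriv(2)
    critical = d1.roots()
    # Keep only (numerically) real critical points, sorted in increasing order.
    real = np.sort(critical[np.abs(critical.imag) < tol].real)
    return real[d2(real) > 0]

x = Polynomial([0.0, 1.0])          # the identity polynomial x
a = 2.0
unimodal = x ** 2                    # f(x) = x^2
bimodal = (x ** 2 - a ** 2) ** 2     # f(x) = (x^2 - a^2)^2

print(pseudomeans(unimodal))   # a single pseudomean at 0
print(pseudomeans(bimodal))    # pseudomeans at -2 and 2 (up to rounding)
```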

In this treatise, we may later consider critical points of the forma function that are not local minima, but for now let’s move on to moments.

For any positive integer $n$, let $F_n$ be the $n$th-order antiderivative of $f$ with all constants of integration equal to zero. Then the $(n+2)$th-order moment under this framework is defined as follows:

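Definition 5 (Moments) Let $p$ be any distribution over the random variable $X$, and let $f$ be our forma function. Then the $(n+2)$th-order moment is given by the equation

$$m_{n+2}(X) = \mathbb{E}_{p}\left[F_n(X)\right] = \int_{\mathbb{R}} F_n(x)\, p(x)\, dx.$$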

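So in the classical framework, by letting our forma function be $f(x) = x^2$, we know that $F_1(x) = x^3/3$. Therefore, our third-order moment can be expressed as

$$m_3(X) = \mathbb{E}_{p}\left[\frac{X^3}{3}\right] = \frac{1}{3}\,\mathbb{E}_{p}\left[X^3\right],$$

which aligns, up to a constant factor, with the classical interpretation of the third-order moment. Throughout this treatise, the quantity $\mu_n$ will be used to refer explicitly to the $n$th-order classical moment.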
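For polynomial formas, the antiderivatives $F_n$ with zero integration constants are available through `Polynomial.integ`, whose default integration constants are zero (with lower bound 0, so $F_n(0) = 0$). This sketch checks the classical case: for $f(x) = x^2$ it gives $F_1(x) = x^3/3$, and the sample average of $F_1$ is exactly one third of the sample third moment. The helper name is hypothetical, and no normalization of the moment beyond this is assumed.

```python
import numpy as np
from numpy.polynomial import Polynomial

def antiderivative(forma, n):
    """Hypothetical helper: n-th order antiderivative with all integration constants zero."""
    # Polynomial.integ sets every constant to zero by default (k=[]), and with
    # lbnd=0 each successive antiderivative vanishes at x = 0.
    return forma.integ(m=n, lbnd=0)

f = Polynomial([0.0, 0.0, 1.0])    # classical forma f(x) = x^2
F1 = antiderivative(f, 1)
print(F1.coef)                     # coefficients of x^3 / 3

rng = np.random.default_rng(0)
x = rng.normal(size=10_000)
# E[F_1(X)] equals one third of the raw third moment E[X^3] on the same samples.
print(np.isclose(F1(x).mean(), np.mean(x ** 3) / 3.0))
```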

Definition 6 (Center translation of forma) Let be a distribution over the random variable

, and let

be the forma function associated with

. For any constant

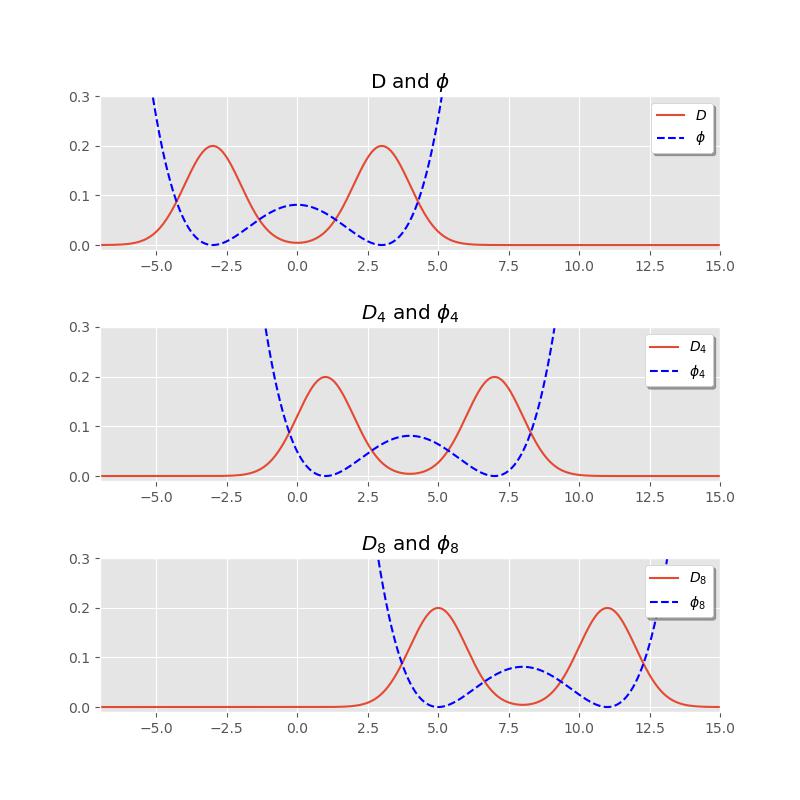

, let the center translation of the forma be

and we denote by the translated distribution, with respect to which the centered pseudovariance is computed via:

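This construction allows us to define a modal center of a distribution. For example, if $f(x) = (x^2 - a^2)^2$ as in our bimodal case, then translating the forma by $c$ yields:

$$f_c(x) = \left((x - c)^2 - a^2\right)^2.$$

This shifts the modal structure $c$ units to the right, so that the pseudomeans become $c - a$ and $c + a$, allowing pseudovariance to adapt to translated modes.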
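Here is a sketch of the center translation for the bimodal forma, using the illustrative values $a = 2$ and $c = 3$ (assumptions). The pseudomeans of $f_c$ move to $c - a$ and $c + a$. Averaging $f$ over samples shifted left by $c$ (samples from $p_c$) gives the same number as averaging $f_c$ over the original samples.

```python
import numpy as np
from numpy.polynomial import Polynomial

a, c = 2.0, 3.0
x = Polynomial([0.0, 1.0])
f = (x ** 2 - a ** 2) ** 2                    # bimodal forma
f_c = ((x - c) ** 2 - a ** 2) ** 2            # center translation f_c(x) = f(x - c)

# Pseudomeans of f_c: real critical points with positive second derivative.
crit = f_c.deriv().roots()
crit = np.sort(crit[np.abs(crit.imag) < 1e-9].real)
modes = crit[f_c.deriv(2)(crit) > 0]
print(modes)                                  # near c - a = 1 and c + a = 5

# Samples from a mixture centered at c - a and c + a.
rng = np.random.default_rng(0)
n = 50_000
samples = rng.choice([c - a, c + a], size=n) + rng.normal(scale=0.5, size=n)
# Shifting the samples left by c matches shifting the forma right by c.
print(np.isclose(f(samples - c).mean(), f_c(samples).mean()))
```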

Interpreting Mixtures (Universally)

Now that we have outlined pseudovariance and the forma function, we turn to the classical interpretation of multimodal distributions, i.e. mixtures, and show how these models can be rephrased, via approximation, as members of the exponential family of distributions.

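Definition 7 (Mixtures) For $i = 1, \dots, k$, let $\mathcal{N}(\mu_i, \sigma_i^2)$ be a Gaussian with mean $\mu_i$ and variance $\sigma_i^2$. Then we define a mixture of the form

$$p(x) = \sum_{i=1}^{k} w_i\, \mathcal{N}(x;\, \mu_i, \sigma_i^2),$$

such that $w_i \ge 0$ and $\sum_{i=1}^{k} w_i = 1$.

In this case, we say that $p$ has $k$ modes.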
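Definition 7 translates directly into code. This hedged sketch evaluates the mixture density and samples from it by first drawing a component index with probabilities $w_i$. The function names and the three-component parameters are hypothetical.

```python
import numpy as np

def mixture_pdf(x, weights, means, sds):
    """Density of sum_i w_i N(x; mu_i, sigma_i^2) evaluated at the points x."""
    x = np.asarray(x, dtype=float)[..., None]          # broadcast over components
    w, mu, sd = (np.asarray(v, dtype=float) for v in (weights, means, sds))
    if np.any(w < 0) or not np.isclose(w.sum(), 1.0):
        raise ValueError("weights must be nonnegative and sum to 1")
    comps = np.exp(-0.5 * ((x - mu) / sd) ** 2) / (sd * np.sqrt(2.0 * np.pi))
    return comps @ w

def sample_mixture(n, weights, means, sds, seed=0):
    """Draw n samples: pick component i with probability w_i, then draw from it."""
    rng = np.random.default_rng(seed)
    idx = rng.choice(len(weights), size=n, p=weights)
    return rng.normal(np.asarray(means)[idx], np.asarray(sds)[idx])

w, mu, sd = [0.2, 0.5, 0.3], [-4.0, 0.0, 3.0], [0.5, 1.0, 0.7]
grid = np.linspace(-10.0, 10.0, 20_001)
print(mixture_pdf(grid, w, mu, sd).sum() * (grid[1] - grid[0]))   # close to 1
print(sample_mixture(100_000, w, mu, sd).mean())                  # close to sum_i w_i mu_i = 0.1
```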

Note: The following theorems merit further consideration in the theory of approximation.

Theorem 1. Let $p$ be a mixture with $k$ modes. Then there exists a positive semidefinite polynomial $f$ of degree at most $2k$ such that $f$ approximates $-\log p$ up to additive and multiplicative constants.

Theorem 2. (Universal Modal Approximation) Let $f$ be the polynomial of the above theorem. Then there exist constants

and

, and function

such that

and

Definition 8 (Dense in a Space)

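It is then worth asking which representation is more expressive. Let $\mathcal{M}_k$ and $\mathcal{E}_k$ be defined as follows:

$$\mathcal{M}_k = \left\{ p : p \text{ is a mixture with } k \text{ modes} \right\}$$

and

$$\mathcal{E}_k = \left\{ p \propto e^{-f} : f \in \mathcal{P}^{+}_{2k} \right\},$$

where $\mathcal{P}^{+}_{2k}$ is the set of positive semidefinite polynomials having degree up to $2k$. There are many criteria for comparing these spaces (e.g. computational efficiency), but for now, to determine which space is more expressive, we might ask whether $\mathcal{M}_k$ is dense in $\mathcal{E}_k$ or conversely.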

Corollary 1. Let $f$ be the polynomial from Theorem 1 (i.e. the closest approximator of $-\log p$). Then we can form the pseudovariance $\operatorname{PVar}_f(X)$

that is inversely proportional to the constants

and

(from Theorem 2), and conversely. Namely,

and

Additional items (toolbox)

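Definition. (Affine Even) The set $\mathcal{A}$ of affine even functions consists of all functions $f : \mathbb{R} \to \mathbb{R}$ for which there exists some $c \in \mathbb{R}$ such that $x \mapsto f(x + c)$ is an even function.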

Proposition. A real-analytic function $f : \mathbb{R} \to \mathbb{R}$ (for example, any polynomial) is even if and only if all of its odd-order derivatives vanish at zero.
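Both notions can be checked numerically for polynomials, which are real-analytic, so the odd-derivative test applies. In this sketch (hypothetical helper names; $a = 2$ and $c = 3$ are illustrative), the bimodal forma is even, while its center translation is not even but is affine even.

```python
import numpy as np
from numpy.polynomial import Polynomial

def odd_derivatives_vanish_at_zero(poly, tol=1e-9):
    """Check that every odd-order derivative of a polynomial is zero at x = 0."""
    return all(abs(poly.deriv(k)(0.0)) < tol
               for k in range(1, poly.degree() + 1, 2))

def is_affine_even(func, c, half_width=5.0, num=201):
    """Numerically check that x -> func(x + c) is even on [-half_width, half_width]."""
    t = np.linspace(-half_width, half_width, num)
    return np.allclose(func(t + c), func(-t + c))

x = Polynomial([0.0, 1.0])
a, c = 2.0, 3.0
f = (x ** 2 - a ** 2) ** 2            # even forma
f_c = ((x - c) ** 2 - a ** 2) ** 2    # its center translation: affine even, not even

print(odd_derivatives_vanish_at_zero(f))     # True: f is even
print(odd_derivatives_vanish_at_zero(f_c))   # False: f_c'(0) = -60
print(is_affine_even(f_c, c))                # True: f_c(x + c) = f(x) is even
```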

Reinterpreting Von Mises with Pseudovariance

To better understand the potential applications of pseudovariance, let’s first consider the unimodal case. The Gaussian distribution provides a degenerate example, in which the pseudovariance collapses to classical variance, so it offers limited insight into the behavior of our generalization. Instead, we use a more geometrically nuanced distribution: the Von Mises distribution. Often regarded as the circular analogue of the Gaussian, the Von Mises distribution closely approximates the wrapped normal distribution, i.e. the normal distribution “wrapped” around the unit circle.

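Its probability density function is defined as follows:

$$p(\theta \mid \mu, \kappa) = \frac{e^{\kappa \cos(\theta - \mu)}}{2\pi I_0(\kappa)}, \qquad \theta \in [-\pi, \pi),$$

where $\mu$ is the location, $\kappa \ge 0$ is the concentration, and $I_0$ is the modified Bessel function of the first kind of order zero.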
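Here is a minimal sketch of the density $e^{\kappa\cos(\theta - \mu)} / (2\pi I_0(\kappa))$, using NumPy's `np.i0` for $I_0$. The function name and the chosen $\kappa$ values are illustrative. Because the density is $2\pi$-periodic, an evenly spaced rectangle rule over one period integrates it very accurately, which gives a quick normalization check. SciPy's `scipy.stats.vonmises` can serve as an independent cross-check.

```python
import numpy as np

def von_mises_pdf(theta, mu=0.0, kappa=1.0):
    """p(theta | mu, kappa) = exp(kappa * cos(theta - mu)) / (2 * pi * I0(kappa))."""
    return np.exp(kappa * np.cos(theta - mu)) / (2.0 * np.pi * np.i0(kappa))

# Evenly spaced grid over one period, endpoint excluded (periodic rectangle rule).
theta = np.linspace(-np.pi, np.pi, 2_000, endpoint=False)
dtheta = 2.0 * np.pi / theta.size
for kappa in (0.5, 2.0, 10.0):
    print(kappa, von_mises_pdf(theta, kappa=kappa).sum() * dtheta)   # close to 1
```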

Links:

OSF Link: